The benefits of using AI and algorithms to identify possible suicide risks, and so to provide support and help save lives, are undoubtedly huge. However, a few key factors need to be considered.

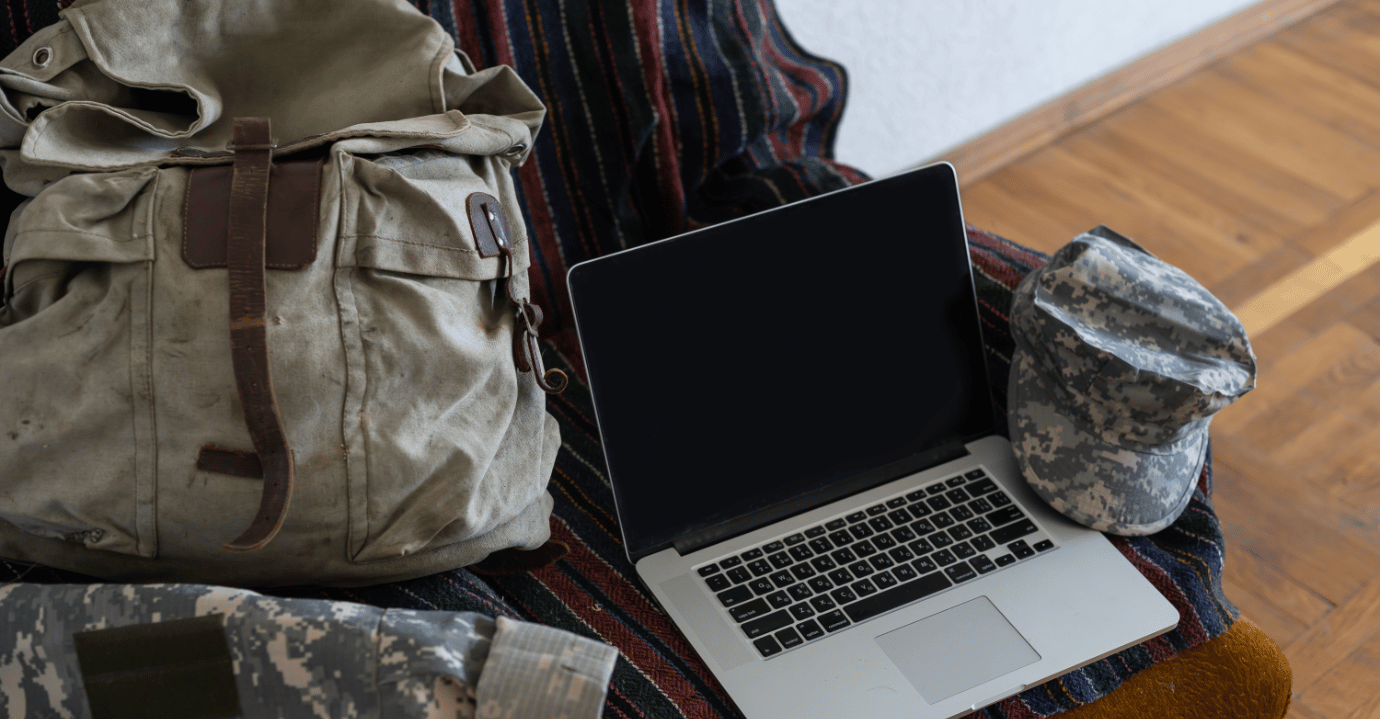

Data of the deceased is, in many instances, afforded less privacy protection. Gaining access to data from messaging, web browsing history, and other private activities or interactions, however, would require extensive management of consents for each data source, assuming those who enter service have the same level of privacy protection as civilians. Legislation may be needed to address such broad consent rights, balancing privacy rights with the public’s interest in suicide prevention.

If there is an opt-in mechanism, what restrictions, if any, should there be on the use of the data beyond suicide prevention, and how should such limitations be communicated to the servicemember to encourage participation? Should the data be admissible for all types of legal proceedings? Should tech companies be afforded limited liability in exchange for their participation, bearing in mind the overarching goal?

Or should we collectively take the view that it’s better to know that somebody’s looking out for you, even if they might get it wrong from time to time? After all, most of us consent to the use of our data by tech giants like Google and Meta in exchange for ‘free’ access to tools and services. Is it, therefore, reasonable – especially in a climate where it’s become more common to talk about mental health – to consent to sharing data from our private lives if it can contribute to the important goal of saving lives?